前情提要

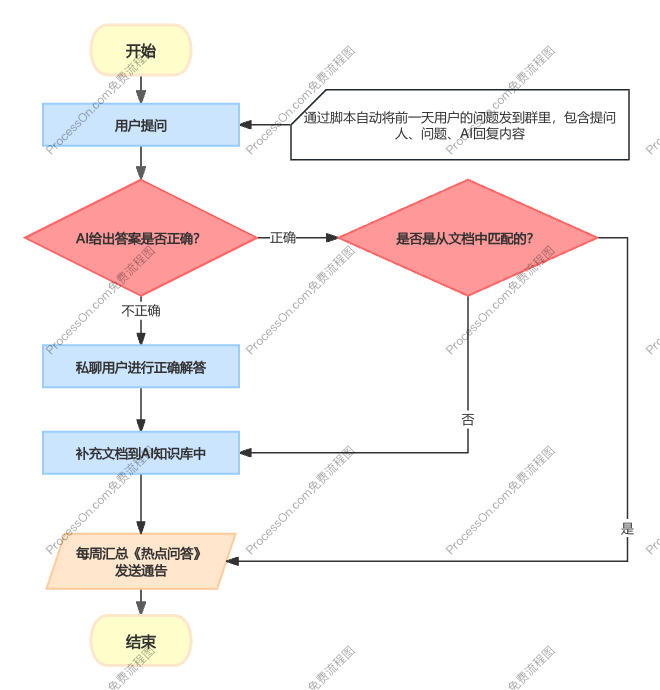

之前使用的Qwen-1.8B-Chat大模型、开源知识库项目FastGPT以及对接大模型API的One API进行本地搭建的大模型系统已经运行了一段时间,主要用途为导入大量应急知识以及漏洞知识自动回答一线师傅的问题。并完善了一个知识库运营体系:

运营过程中,针对漏洞及应急常识回答较好。

由于没有解除模型自带的道德限制,偶尔会出现回答“我不能为您解答漏洞/免杀等相关内容”的情况。

另外一些针对性功能性特定性的问题回答较差,例如本文下面要写的:通过大模型生成天眼查询语句。通过测试,在知识库中传入查询语句编写规则后大模型无法理解并输出正确的查询语句,在知识库中传入一些示例问答也无法很好的解决。于是开始通过尝试微调进行解决。

什么是微调

微调(Fine-tuning)是指在预训练模型的基础上进行进一步训练,以适应特定任务或领域需求的过程。通过微调,可以利用少量特定任务的数据,对预训练模型进行调整,使其在该任务上的表现更加优异。微调的步骤通常包括:1. 加载预训练模型;2. 准备特定任务的数据集;3. 训练模型;4. 验证和调整。

通俗意义来讲,微调是通过数据集对某一个特定知识进行针对性训练。用一个人的技能学习来同步比喻,微调的步骤为:1. 找这个人过来;2. 拿到乘法口诀表;3. 通过背诵的方式进行训练4. 提问有没有学会。再用这个例子来说明,知识库的方式就是提问之后在书里现查。

在微调中使用最多的是低秩自适应技术即LoRA。微调大语言模型需要大量的显存占用,LoRA的核心意义在于用时间换空间,即以运行时间增长为代价,节省内存。下午中的微调也采用的是LoRA方法。

对Qwen-1.8B-Chat进行微调

加载预训练模型

首先下载Qwen存储库:

!git clone https://github.com/QwenLM/Qwen.git返回:

正克隆到 'Qwen'...

remote: Enumerating objects: 1717, done.

remote: Counting objects: 100% (791/791), done.

remote: Compressing objects: 100% (289/289), done.

remote: Total 1717 (delta 600), reused 576 (delta 487), pack-reused 926

接收对象中: 100% (1717/1717), 35.82 MiB | 11.26 MiB/s, 完成.

处理 delta 中: 100% (1014/1014), 完成.移动到下载的目录中,并查看当前目录:

%cd Qwen

import os

print("当前工作目录:", os.getcwd())返回:

当前工作目录: /mnt/workspace/Qwen导入Qwen-1.8B-Chat模型:

!git clone https://modelscope.cn/qwen/Qwen-1_8B-Chat.git返回:

正克隆到 'Qwen-1_8B-Chat'...

remote: Enumerating objects: 113, done.

remote: Counting objects: 100% (113/113), done.

remote: Compressing objects: 100% (93/93), done.

remote: Total 113 (delta 33), reused 81 (delta 17), pack-reused 0

接收对象中: 100% (113/113), 16.18 MiB | 47.75 MiB/s, 完成.

处理 delta 中: 100% (33/33), 完成.

过滤内容: 100% (2/2), 3.42 GiB | 171.02 MiB/s, 完成.准备特定任务的数据集

生成500份训练数据,主要是查询源IP和目录IP的语句:

import json

import random

import pandas as pd

# 生成随机 IP 地址的函数

def generate_random_ip():

return f"{random.randint(0, 255)}.{random.randint(0, 255)}.{random.randint(0, 255)}.{random.randint(0, 255)}"

# 一些示例自然语言查询

queries = [

"查询源IP为{sip}和目的IP为{dip}的日志。",

"我想知道从{sip}到{dip}的日志信息。",

"请帮我查询源IP是{sip},目的IP是{dip}的日志。",

"查找源IP为{sip}的日志,并且目的IP为{dip}。",

"请查询从IP地址{sip}到{dip}的日志。",

"我要查看源IP为{sip}和目的IP为{dip}的所有日志记录。",

"帮我检索源IP是{sip},目的IP是{dip}的日志。",

"找出源IP为{sip}且目的IP为{dip}的日志信息。"

]

# 生成数据集

data = []

for i in range(500):

sip = generate_random_ip()

dip = generate_random_ip()

while sip == dip: # 确保源IP和目的IP不相同

dip = generate_random_ip()

natural_query = random.choice(queries).format(sip=sip, dip=dip)

tianyan_query = f"sip:({sip}) AND dip:({dip})"

conversations = [

{

"from": "user",

"value": natural_query

},

{

"from": "assistant",

"value": tianyan_query

}

]

data.append({

"id": f"identity_{i}",

"conversations": conversations

})

# 保存为JSONL文件

with open("tianyan_queries_dataset.jsonl", "w", encoding="utf-8") as f:

for entry in data:

json.dump(entry, f, ensure_ascii=False)

f.write('n')

print("数据集生成完毕并已保存为tianyan_queries_dataset.jsonl")

# 从JSONL文件读取数据并转换成所需格式

file_path = 'tianyan_queries_dataset.jsonl'

# 读取文件并解析JSON

data = []

with open(file_path, 'r', encoding='utf-8') as file:

for line in file:

data.append(json.loads(line))

# 解析对话数据

all_chat = []

for entry in data:

conversations = entry["conversations"]

user = next(item["value"] for item in conversations if item["from"] == "user")

assistant = next(item["value"] for item in conversations if item["from"] == "assistant")

chat = {

"id": entry["id"],

"conversations": [

{"from": "user", "value": user},

{"from": "assistant", "value": assistant}

]

}

all_chat.append(chat)

# 保存为所需格式的JSON文件

output_file_path = 'chat.json'

with open(output_file_path, 'w', encoding='utf-8') as json_file:

json.dump(all_chat, json_file, ensure_ascii=False, indent=4)

print("数据集生成完毕并已保存为chat.json")返回:

数据集生成完毕并已保存为tianyan_queries_dataset.jsonl

数据集生成完毕并已保存为chat.json训练模型

利用模型脚本利用lora开始进行微调:

!export CUDA_DEVICE_MAX_CONNECTIONS=1

!export CUDA_VISIBLE_DEVICES=0

!python finetune.py

--model_name_or_path Qwen-1_8B-Chat

--data_path chat.json

--output_dir output_qwen

--num_train_epochs 1

--per_device_train_batch_size 2

--per_device_eval_batch_size 1

--gradient_accumulation_steps 8

--evaluation_strategy "no"

--save_strategy "steps"

--save_steps 1000

--save_total_limit 10

--learning_rate 3e-4

--weight_decay 0.1

--adam_beta2 0.95

--warmup_ratio 0.01

--lr_scheduler_type "cosine"

--logging_steps 1

--report_to "none"

--model_max_length 512

--lazy_preprocess True

--gradient_checkpointing

--use_lora返回:

[2024-06-12 09:45:08,470] [INFO] [real_accelerator.py:203:get_accelerator] Setting ds_accelerator to cuda (auto detect)

df: /root/.triton/autotune: 没有那个文件或目录

[WARNING] Please specify the CUTLASS repo directory as environment variable $CUTLASS_PATH

[WARNING] NVIDIA Inference is only supported on Ampere and newer architectures

[WARNING] sparse_attn requires a torch version >= 1.5 and < 2.0 but detected 2.3

[WARNING] using untested triton version (2.3.1), only 1.0.0 is known to be compatible

2024-06-12 09:45:13.845838: I tensorflow/core/platform/cpu_feature_guard.cc:210] This TensorFlow binary is optimized to use available CPU instructions in performance-critical operations.

To enable the following instructions: AVX2 FMA, in other operations, rebuild TensorFlow with the appropriate compiler flags.

2024-06-12 09:45:15.053732: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT

/usr/local/lib/python3.10/site-packages/transformers/deepspeed.py:23: FutureWarning: transformers.deepspeed module is deprecated and will be removed in a future version. Please import deepspeed modules directly from transformers.integrations

warnings.warn(

/usr/local/lib/python3.10/site-packages/transformers/training_args.py:1474: FutureWarning: `evaluation_strategy` is deprecated and will be removed in version 4.46 of 🤗 Transformers. Use `eval_strategy` instead

warnings.warn(

The model is automatically converting to bf16 for faster inference. If you want to disable the automatic precision, please manually add bf16/fp16/fp32=True to "AutoModelForCausalLM.from_pretrained".

Loading checkpoint shards: 100%|██████████████████| 2/2 [00:00<00:00, 2.78it/s]

trainable params: 53,673,984 || all params: 1,890,502,656 || trainable%: 2.8391

Loading data...

Formatting inputs...Skip in lazy mode

Detected kernel version 4.19.91, which is below the recommended minimum of 5.5.0; this can cause the process to hang. It is recommended to upgrade the kernel to the minimum version or higher.

You are using an old version of the checkpointing format that is deprecated (We will also silently ignore `gradient_checkpointing_kwargs` in case you passed it).Please update to the new format on your modeling file. To use the new format, you need to completely remove the definition of the method `_set_gradient_checkpointing` in your model.

0%| | 0/31 [00:00<?, ?it/s]/usr/local/lib/python3.10/site-packages/torch/utils/checkpoint.py:464: UserWarning: torch.utils.checkpoint: the use_reentrant parameter should be passed explicitly. In version 2.4 we will raise an exception if use_reentrant is not passed. use_reentrant=False is recommended, but if you need to preserve the current default behavior, you can pass use_reentrant=True. Refer to docs for more details on the differences between the two variants.

warnings.warn(

{'loss': 1.2988, 'grad_norm': 0.56640625, 'learning_rate': 0.0003, 'epoch': 0.03}

{'loss': 1.3076, 'grad_norm': 0.6171875, 'learning_rate': 0.00029917828430524096, 'epoch': 0.06}

{'loss': 1.0557, 'grad_norm': 0.484375, 'learning_rate': 0.0002967221401100708, 'epoch': 0.1}

...

/usr/local/lib/python3.10/site-packages/peft/utils/other.py:611: UserWarning: Unable to fetch remote file due to the following error (MaxRetryError("HTTPSConnectionPool(host='huggingface.co', port=443): Max retries exceeded with url: /Qwen-1_8B-Chat/resolve/main/config.json (Caused by NewConnectionError('<urllib3.connection.HTTPSConnection object at 0x7f113cf41f90>: Failed to establish a new connection: [Errno 101] Network is unreachable'))"), '(Request ID: 4c977f93-02cb-460a-af40-b66731aa8c8e)') - silently ignoring the lookup for the file config.json in Qwen-1_8B-Chat.

warnings.warn(

/usr/local/lib/python3.10/site-packages/peft/utils/save_and_load.py:195: UserWarning: Could not find a config file in Qwen-1_8B-Chat - will assume that the vocabulary was not modified.

warnings.warn(

Output is truncated. View as a scrollable element or open in a text editor. Adjust cell output settings...验证和调整

将训练完成的模型进行合并:

from peft import AutoPeftModelForCausalLM

from transformers import AutoTokenizer

model = AutoPeftModelForCausalLM.from_pretrained(

"output_qwen",

device_map="auto",

trust_remote_code=True

).eval()

merged_model = model.merge_and_unload()

merged_model.save_pretrained("qwen-1_8b-finetune", max_shard_size="2048MB", safe_serialization=True)

tokenizer = AutoTokenizer.from_pretrained(

"output_qwen",

trust_remote_code=True

)

tokenizer.save_pretrained("qwen-1_8b-finetune")返回:

/usr/local/lib/python3.10/site-packages/tqdm/auto.py:21: TqdmWarning: IProgress not found. Please update jupyter and ipywidgets. See https://ipywidgets.readthedocs.io/en/stable/user_install.html

from .autonotebook import tqdm as notebook_tqdm

2024-06-12 09:56:21.076395: I tensorflow/core/platform/cpu_feature_guard.cc:210] This TensorFlow binary is optimized to use available CPU instructions in performance-critical operations.

To enable the following instructions: AVX2 FMA, in other operations, rebuild TensorFlow with the appropriate compiler flags.

2024-06-12 09:56:22.504679: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:38] TF-TRT Warning: Could not find TensorRT

The model is automatically converting to bf16 for faster inference. If you want to disable the automatic precision, please manually add bf16/fp16/fp32=True to "AutoModelForCausalLM.from_pretrained".

Loading checkpoint shards: 100%|██████████| 2/2 [00:01<00:00, 1.11it/s]

[2024-06-12 09:56:33,944] [INFO] [real_accelerator.py:203:get_accelerator] Setting ds_accelerator to cuda (auto detect)

[WARNING] Please specify the CUTLASS repo directory as environment variable $CUTLASS_PATH

[WARNING] NVIDIA Inference is only supported on Ampere and newer architectures

[WARNING] sparse_attn requires a torch version >= 1.5 and < 2.0 but detected 2.3

[WARNING] using untested triton version (2.3.1), only 1.0.0 is known to be compatible

('qwen-1_8b-finetune/tokenizer_config.json',

'qwen-1_8b-finetune/special_tokens_map.json',

'qwen-1_8b-finetune/qwen.tiktoken',

'qwen-1_8b-finetune/added_tokens.json')用10条数据进行测试查看输出效果:

import random

from transformers import AutoModelForCausalLM, AutoTokenizer

def generate_random_ip():

return f"{random.randint(0, 255)}.{random.randint(0, 255)}.{random.randint(0, 255)}.{random.randint(0, 255)}"

# 一些示例自然语言查询

queries = [

"查询源IP为{sip}和目的IP为{dip}的日志。",

"我想知道从{sip}到{dip}的日志信息。",

"请帮我查询源IP是{sip},目的IP是{dip}的日志。",

"查找源IP为{sip}的日志,并且目的IP为{dip}。",

"请查询从IP地址{sip}到{dip}的日志。",

"我要查看源IP为{sip}和目的IP为{dip}的所有日志记录。",

"帮我检索源IP是{sip},目的IP是{dip}的日志。",

"找出源IP为{sip}且目的IP为{dip}的日志信息。"

]

# 生成测试提问数据

test_queries = []

for _ in range(10):

sip = generate_random_ip()

dip = generate_random_ip()

while sip == dip: # 确保源IP和目的IP不相同

dip = generate_random_ip()

natural_query = random.choice(queries).format(sip=sip, dip=dip)

test_queries.append(natural_query)

# 加载模型和分词器

tokenizer = AutoTokenizer.from_pretrained("qwen-1_8b-finetune", trust_remote_code=True)

model = AutoModelForCausalLM.from_pretrained("qwen-1_8b-finetune", device_map="auto", trust_remote_code=True).eval()

# 测试生成的提问数据

for query in test_queries:

response, history = model.chat(tokenizer, query, history=None)

print(f"Query: {query}")

print(f"Response: {response}n")返回:

Loading checkpoint shards: 100%|██████████| 2/2 [00:01<00:00, 1.08it/s]

Query: 帮我检索源IP是118.138.10.108,目的IP是115.63.53.56的日志。

Response: sip:(118.138.10.108) AND dip:(115.63.53.56)

Query: 查询源IP为217.167.253.203和目的IP为166.108.20.181的日志。

Response: sip:(217.167.253.203) AND dip:(166.108.20.181)

Query: 请查询从IP地址138.149.107.95到115.195.181.176的日志。

Response: sip:(138.149.107.95) AND dip:(115.195.181.176)

Query: 请帮我查询源IP是149.25.199.162,目的IP是158.68.186.202的日志。

Response: sip:(149.25.199.162) AND dip:(158.68.186.202)

Query: 找出源IP为165.32.119.78且目的IP为228.34.43.191的日志信息。

Response: sip:(165.32.119.78) AND dip:(228.34.43.191)

Query: 我想知道从249.12.227.60到122.81.63.32的日志信息。

Response: sip:(249.12.227.60) AND dip:(122.81.63.32)

Query: 我要查看源IP为192.31.154.245和目的IP为38.109.134.216的所有日志记录。

Response: sip:(192.31.154.245) AND dip:(38.109.134.216)

Query: 我要查看源IP为251.143.92.121和目的IP为168.47.14.181的所有日志记录。

Response: sip:(251.143.92.121) AND dip:(168.47.14.181)

Query: 查找源IP为83.161.218.0的日志,并且目的IP为59.58.86.28。

...

Query: 查询源IP为102.80.55.173和目的IP为43.45.194.13的日志。

Response: sip:(102.80.55.173) AND dip:(43.45.194.13)

Output is truncated. View as a scrollable element or open in a text editor. Adjust cell output settings...可以看到效果很好,输出内容完全正常且完美的进行了格式化。

接下来尝试更加复杂的语句。

生成500份训练数据:

import json

import random

def generate_random_ip():

return f"{random.randint(0, 255)}.{random.randint(0, 255)}.{random.randint(0, 255)}.{random.randint(0, 255)}"

def generate_random_port():

return random.randint(1, 65535)

def generate_random_dns_type():

return random.choice([0, 1])

def generate_random_string(length=8):

letters = "abcdefghijklmnopqrstuvwxyz"

return ''.join(random.choice(letters) for i in range(length))

def generate_random_md5():

return ''.join(random.choice("0123456789abcdef") for i in range(32))

# 字段及其注释

fields = {

"serial_num": "传感器序列号",

"access_time": "日志生成时间",

"sip": "源IP",

"sport": "源端口",

"dip": "目的IP",

"dport": "目的端口",

"dns_type": "DNS访问类型",

"host": "Host",

"host_md5": "Host字段对应的MD5值",

"addr": "地址资源",

"mx": "邮件交换记录",

"cname": "域名的规范名称",

"reply_code": "DNS响应结果状态"

}

# 生成数据集

data = []

for i in range(500):

field_values = {

"serial_num": generate_random_string(),

"access_time": generate_random_string(),

"sip": generate_random_ip(),

"sport": generate_random_port(),

"dip": generate_random_ip(),

"dport": generate_random_port(),

"dns_type": generate_random_dns_type(),

"host": generate_random_string(),

"host_md5": generate_random_md5(),

"addr": generate_random_ip(),

"mx": generate_random_string(),

"cname": generate_random_string(),

"reply_code": random.randint(0, 5)

}

# 确保源IP和目的IP不相同

while field_values["sip"] == field_values["dip"]:

field_values["dip"] = generate_random_ip()

# 随机选择查询字段组合

selected_fields = random.sample(list(field_values.items()), random.randint(2, 6))

natural_query_parts = []

tianyan_query_parts = []

for field, value in selected_fields:

natural_query_parts.append(f"{fields[field]}为{value}")

tianyan_query_parts.append(f"{field}:({value})")

# 根据提问内容随机确定使用 AND 或 OR

if random.random() > 0.5:

logical_operator = " AND "

natural_query = "和".join(natural_query_parts) + " 的日志。"

else:

logical_operator = " OR "

natural_query = "或".join(natural_query_parts) + " 的日志。"

tianyan_query = logical_operator.join(tianyan_query_parts)

conversations = [

{

"from": "user",

"value": natural_query

},

{

"from": "assistant",

"value": tianyan_query

}

]

data.append({

"id": f"identity_{i}",

"conversations": conversations

})

# 保存为JSONL文件

with open("tianyan_queries_dataset.jsonl", "w", encoding="utf-8") as f:

for entry in data:

json.dump(entry, f, ensure_ascii=False)

f.write('n')

print("数据集生成完毕并已保存为tianyan_queries_dataset.jsonl")

# 从JSONL文件读取数据并转换成所需格式

file_path = 'tianyan_queries_dataset.jsonl'

# 读取文件并解析JSON

data = []

with open(file_path, 'r', encoding='utf-8') as file:

for line in file:

data.append(json.loads(line))

# 解析对话数据

all_chat = []

for entry in data:

conversations = entry["conversations"]

user = next(item["value"] for item in conversations if item["from"] == "user")

assistant = next(item["value"] for item in conversations if item["from"] == "assistant")

chat = {

"id": entry["id"],

"conversations": [

{"from": "user", "value": user},

{"from": "assistant", "value": assistant}

]

}

all_chat.append(chat)

# 保存为所需格式的JSON文件

output_file_path = 'chat.json'

with open(output_file_path, 'w', encoding='utf-8') as json_file:

json.dump(all_chat, json_file, ensure_ascii=False, indent=4)

print("数据集生成完毕并已保存为chat.json")返回:

数据集生成完毕并已保存为tianyan_queries_dataset.jsonl

数据集生成完毕并已保存为chat.json然后重复进行上述的训练和合并,对训练结果进行测试:

import json

import random

def generate_random_ip():

return f"{random.randint(0, 255)}.{random.randint(0, 255)}.{random.randint(0, 255)}.{random.randint(0, 255)}"

def generate_random_port():

return random.randint(1, 65535)

def generate_random_dns_type():

return random.choice([0, 1])

def generate_random_string(length=8):

letters = "abcdefghijklmnopqrstuvwxyz"

return ''.join(random.choice(letters) for i in range(length))

def generate_random_md5():

return ''.join(random.choice("0123456789abcdef") for i in range(32))

# 字段及其注释

fields = {

"serial_num": "传感器序列号",

"access_time": "日志生成时间",

"sip": "源IP",

"sport": "源端口",

"dip": "目的IP",

"dport": "目的端口",

"dns_type": "DNS访问类型",

"host": "Host",

"host_md5": "Host字段对应的MD5值",

"addr": "地址资源",

"mx": "邮件交换记录",

"cname": "域名的规范名称",

"reply_code": "DNS响应结果状态"

}

# 生成数据集

data = []

test_queries = []

for i in range(10): # 数据量可以根据需求调整

field_values = {

"serial_num": generate_random_string(),

"access_time": generate_random_string(),

"sip": generate_random_ip(),

"sport": generate_random_port(),

"dip": generate_random_ip(),

"dport": generate_random_port(),

"dns_type": generate_random_dns_type(),

"host": generate_random_string(),

"host_md5": generate_random_md5(),

"addr": generate_random_ip(),

"mx": generate_random_string(),

"cname": generate_random_string(),

"reply_code": random.randint(0, 5)

}

# 确保源IP和目的IP不相同

while field_values["sip"] == field_values["dip"]:

field_values["dip"] = generate_random_ip()

# 随机选择查询字段组合

selected_fields = random.sample(list(field_values.items()), random.randint(2, 6))

natural_query_parts = []

tianyan_query_parts = []

for field, value in selected_fields:

natural_query_parts.append(f"{fields[field]}为{value}")

tianyan_query_parts.append(f"{field}:({value})")

# 根据提问内容随机确定使用 AND 或 OR

if random.random() > 0.5:

logical_operator = " AND "

natural_query = "和".join(natural_query_parts) + " 的日志。"

else:

logical_operator = " OR "

natural_query = "或".join(natural_query_parts) + " 的日志。"

tianyan_query = logical_operator.join(tianyan_query_parts)

conversations = [

{

"from": "user",

"value": natural_query

},

{

"from": "assistant",

"value": tianyan_query

}

]

data.append({

"id": f"identity_{i}",

"conversations": conversations

})

test_queries.append(natural_query)

# 加载模型和分词器

tokenizer = AutoTokenizer.from_pretrained("qwen-1_8b-finetune", trust_remote_code=True)

model = AutoModelForCausalLM.from_pretrained("qwen-1_8b-finetune", device_map="auto", trust_remote_code=True).eval()

# 测试生成的提问数据

for query in test_queries:

response, history = model.chat(tokenizer, query, history=None)

print(f"Query: {query}")

print(f"Response: {response}n")返回:

Loading checkpoint shards: 100%|██████████| 2/2 [00:01<00:00, 1.18it/s]

Query: DNS响应结果状态为3或目的IP为23.251.209.49或地址资源为179.203.235.32或域名的规范名称为xmcjzndo或传感器序列号为mdetiwtm或Host为sgrsnftk 的日志。

Response: reply_code:(3) OR dip:(23.251.209.49) OR addr:(179.203.235.32) OR cname:(xmcjzndo) OR serial_num:(mdetiwtm) OR host:(sgrsnftk)

Query: 日志生成时间为tegfohqz和源IP为80.131.201.149 的日志。

Response: access_time:(tegfohqz) AND sip:(80.131.201.149)

Query: 地址资源为29.145.217.54或邮件交换记录为aacjgxnc 的日志。

Response: addr:(29.145.217.54) OR mx:(aacjgxnc)

Query: 传感器序列号为pujqiblw和地址资源为156.161.23.50 的日志。

Response: serial_num:(pujqiblw) AND addr:(156.161.23.50)

Query: DNS访问类型为0和传感器序列号为plktsqsm和目的IP为221.16.188.210和日志生成时间为hfllcizd和目的端口为58133和DNS响应结果状态为5 的日志。

Response: dns_type:(0) AND serial_num:(plksqsm) AND dip:(221.16.188.210) AND access_time:(hfllcizd) AND dport:(58133) AND reply_code:(5)

Query: Host字段对应的MD5值为3eed1d3dff0f60de7f4d6d05821f0b37或日志生成时间为vctsirgu或源IP为46.211.134.113或DNS响应结果状态为5 的日志。

Response: host_md5:(3eed1d3dff0f60de7f4d6d05821f0b37) OR access_time:(vctsirgu) OR sip:(46.211.134.113) OR reply_code:(5)

Query: 目的IP为52.78.87.125和DNS访问类型为1和地址资源为149.152.154.71和日志生成时间为fmslrlhl和DNS响应结果状态为4 的日志。

Response: dip:(52.78.87.125) AND dns_type:(1) AND addr:(149.152.154.71) AND access_time:(fmslrlhl) AND reply_code:(4)

Query: 目的IP为207.158.187.190和Host字段对应的MD5值为5763756879290cf47fddfa7250e6922a和邮件交换记录为ciakpqwh 的日志。

Response: dip:(207.158.187.190) AND host_md5:(5763756879290cf47fddfa7250e6922a) AND mx:(ciakpqwh)

Query: 传感器序列号为qhijdkgh和源IP为114.241.171.95 的日志。

...

Query: 目的IP为158.148.183.244或Host为yxmzzldo或DNS访问类型为1或地址资源为86.87.148.86 的日志。

Response: dip:(158.148.183.244) OR host:(yxmzzldo) OR dns_type:(1) OR addr:(86.87.148.86)

Output is truncated. View as a scrollable element or open in a text editor. Adjust cell output settings...数据集是脚本生成,所以在表达上有些生硬,但是结果完全正确。

发表回复